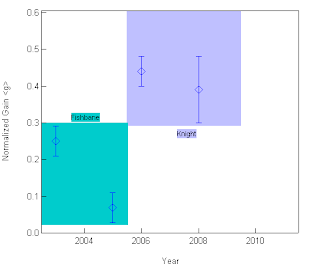

The lower scores we saw with the M&I text mirrored those reported in the AJP paper that started the discussion, but we also saw a very large uptick in scores in 2006, when we began using Randall Knight's Physics for Scientists and Engineers.

Before 2006, different instructors used different texts (Serway, M&I, Fishbane). In 2006 we decided to adopt a single textbook, and we chose Knight largely because it was advertised as strong in PER approaches and in building conceptual understanding. At the same time, we moved from homework submitted on paper to homework done on Mastering Physics, the online system that can accompany the Knight text.

Whether the change is attributable to the text or to the online homework, our students' normalized gains clearly changed. The results for individual instructors show that the improvement was universal.
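For readers unfamiliar with the term, the normalized gain is usually defined as the fraction of the possible improvement that a class actually achieved. The expression below assumes the standard class-average definition, with pre- and post-test averages expressed as percentages; the symbols are illustrative and are not taken from our data.

$$
\langle g \rangle = \frac{\langle \text{post} \rangle - \langle \text{pre} \rangle}{100 - \langle \text{pre} \rangle}
$$

As a purely hypothetical example, a class averaging 40% on the pre-test and 70% on the post-test would have $\langle g \rangle = (70 - 40)/(100 - 40) = 0.5$.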

Instructor B (above) teaches with more demonstrations than the rest of the department, often in ILD format. His results with the Serway text were mixed, but his Knight results are very consistent, and higher than most of his Serway results.

Instructor C questions his students a great deal, but does not follow many other PER suggestions. Although he recognized that the Knight text had much more conceptual material than most texts, he opted not to change the material he covered and continued his "standard treatment". He, too, saw an impressive increase.

All three instructors created their own homework assignments. Some were due weekly, others every other day. As mentioned above, the three instructors had vastly different teaching styles and approaches. The consistent elements were the Knight textbook and Mastering Physics. Whether it is one, the other, or the combination, it seems clear that the choice of text and/or homework system can make a large difference in results.